Set Up Jenkins CI Pipelines on Kubernetes: Easy Guide

Learn to deploy Jenkins CI on Kubernetes for scalable and efficient continuous integration workflows

Published on: Oct 30, 2015

Last updated on: Jul 17, 2025

Continuous Integration Introduction

Continuous Integration (CI) is the development practice of triggering a build whenever you push code to a repository. The build is then tested, and if the tests pass the change is allowed to be merged. This helps to enforce that any new code won’t cause regressions, and also that any new features have matching tests. Jenkins is an open source implementation of a CI server, written in Java.

CI is a standard part of a DevOps workflow. As we run many services on Kubernetes, why would we have a build server sitting there idle when we could spin one up on demand?

Containerising Jenkins Master

Kubernetes runs Docker containers. While there’s nothing stopping you from deploying the official Jenkins image, that won’t get you dynamically provisioned Jenkins slaves! For that you will need to roll your own container, including the Kubernetes plugin. Other customisations we use include Groovy scripts that automatically configure the Jenkins master to integrate with our GitLab repository and configure the Kubernetes plugin from environment variables - meaning we can re-use the container on any cluster without rebuilding it.
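To illustrate why configuring from environment variables makes the container portable, here is a minimal sketch of the idea. It is written in Python purely for illustration (the scripts mentioned above are Groovy and are not reproduced here), and the variable names and default values are assumptions, not the ones our image uses.

```python
import os

# Hypothetical variable names and defaults, chosen for illustration only.
DEFAULTS = {
    "KUBERNETES_SERVER_URL": "https://kubernetes.default.svc",
    "KUBERNETES_NAMESPACE": "default",
    "JENKINS_URL": "http://jenkins:8080",
}


def load_cloud_config(env=None):
    """Collect Kubernetes plugin settings from the environment.

    Anything not set falls back to a default, so the same image can be
    started on a different cluster by changing only its environment.
    """
    env = os.environ if env is None else env
    return {
        "server_url": env.get("KUBERNETES_SERVER_URL", DEFAULTS["KUBERNETES_SERVER_URL"]),
        "namespace": env.get("KUBERNETES_NAMESPACE", DEFAULTS["KUBERNETES_NAMESPACE"]),
        "jenkins_url": env.get("JENKINS_URL", DEFAULTS["JENKINS_URL"]),
    }


if __name__ == "__main__":
    # Print the resolved settings so they can be checked at container start-up.
    for key, value in sorted(load_cloud_config().items()):
        print(f"{key}={value}")
```

Because nothing cluster-specific is baked into the image, moving to another cluster only means setting different values on the container.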

Jenkins Slave Container

As we want our Jenkins slave to be able to build anything, but still be a fairly small container by itself, we use the “Docker in Docker” pattern we talked about in a previous blog post, “Build Docker Containers Inside Docker: A Quick Guide”. This means that the slave container runs a container inside it where all building takes place.

Having a build container is fairly common practice as a means of resolving dependency hell - once you’ve created a working build environment on your laptop, you can push the whole thing to your build server. The Jenkins slave container can then be small and simple - it contains docker, DinD, the Java runtime environment and a Jenkins slave jar.
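As a rough sketch of what "all building takes place in an inner container" can look like, the snippet below assembles a `docker run` command that the slave could execute against its own Docker daemon. The image name, paths and build command are hypothetical placeholders, and this is not the exact invocation we use.

```python
import shlex


def build_command(image, workspace, command):
    """Return the argv for running `command` inside a throwaway build container."""
    return [
        "docker", "run",
        "--rm",                           # remove the build container when it exits
        "-v", f"{workspace}:/workspace",  # mount the checked-out source
        "-w", "/workspace",               # run the build from the source directory
        image,
        "sh", "-c", command,
    ]


if __name__ == "__main__":
    # Hypothetical image, workspace path and build command.
    argv = build_command("example/build-env:latest", "/home/jenkins/job", "make test")
    print(shlex.join(argv))
```

The build tooling lives entirely in the build image, which is why the slave image itself only needs Docker, the Java runtime and the slave jar.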

Jenkins Final Implementation Steps

There are two ways you could run your Jenkins slaves on Kubernetes. Firstly, you could deploy them as a standard ReplicationController. This means they will sit there (always running) and build when a job is pushed to them. The second way, which we use, is to create a Kubernetes Pod per build, which runs once, pushes the build artefact, and then exits. Depending on the number of builds you have going at once, either method has advantages; we just prefer not to have random Java containers hanging around eating resources!
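The Pod-per-build approach can be sketched as a small function that generates a one-shot Pod manifest. This is an illustration under assumptions rather than our actual configuration: the image name, label and naming scheme are hypothetical, and the privileged flag is there because Docker in Docker typically needs it.

```python
import json


def build_pod(job_name, build_number, image):
    """Return a Pod manifest for a single build that runs once and exits."""
    # Assumes job_name contains only characters valid in a Pod name
    # once lowercased (letters, digits, '-' and '.').
    name = f"{job_name}-{build_number}".lower()
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "jenkins-slave"}},
        "spec": {
            # Never restart: the Pod finishes when the build does.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "jenkins-slave",
                    "image": image,
                    # Docker in Docker typically requires a privileged container.
                    "securityContext": {"privileged": True},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical job name, build number and image.
    print(json.dumps(build_pod("my-service", 42, "example/jenkins-slave:latest"), indent=2))
```

The key difference from the ReplicationController approach is `restartPolicy: Never`: once the build container exits, nothing brings it back, so no idle slaves are left running.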

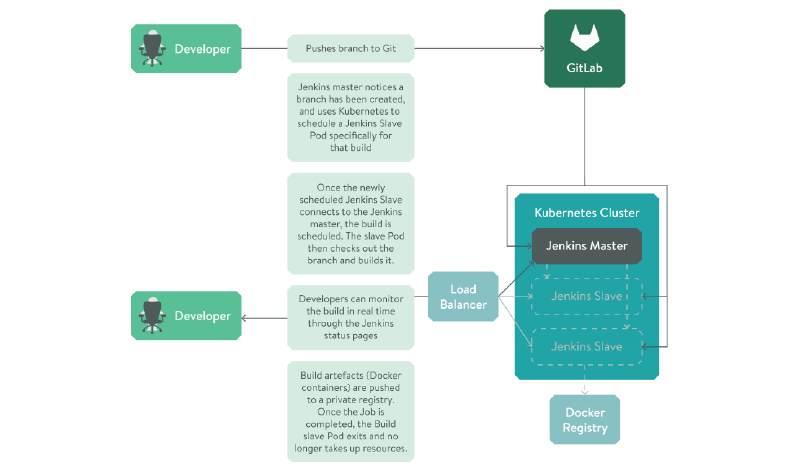

Overall, our CI workflow looks something like this:

Figure - CI workflow diagram

If you’ve set up something similar, let us know through the LiveWyer Contact page!